How well can an algorithm solve the NYT Connections game?

We humans are pretty good at noticing the four hidden groupings in the grid of sixteen words in the NYT's Connections game -- at least I am. But I wondered, how well could a computer do?¹

Not great, at least not my algorithm. But it's not THAT bad either.

My algorithm solves nine of 400 puzzles correctly on the first try (2.2%). For 20 more (5.0%), it gets two clusters correct and goofs the other two.

Why is Connections so hard to get right?

Let's look at one puzzle:

| handful | several | post | planet |
| moon | mellon | comet | pair |
| asteroid | plumb | sun | some |
| journal | few | lyme | globe |

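There are 63,063,000 ways to deal the sixteen words into four groups of four if you count which group is which. The order of the groups doesn't matter, though, so that's 2,627,625 genuinely different solutions. Either way, you can't just guess. If you made one random guess per daily puzzle, it would take about 5,000 years' worth of puzzles before you had a coin-flip's chance of having guessed one right. You need something that understands words and how they relate to each other. That's why I built a little algorithm to measure the "incoherence" of a cluster of four words.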
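Here's a minimal Python sketch of that arithmetic; the function names are mine. It assumes one uniformly random guess per daily puzzle, so the chance of missing every time after n puzzles is (1 - 1/N)^n, where N is the number of distinct groupings. Dividing the 63,063,000 ordered splits by 4! = 24 removes the reorderings of the four groups, since the right groups in any order are the same answer.

```python
import math


def ordered_splits(n_words: int = 16, group_size: int = 4) -> int:
    """Ways to deal the words into labeled groups (e.g. one group per color)."""
    n_groups = n_words // group_size
    return math.factorial(n_words) // math.factorial(group_size) ** n_groups


def distinct_groupings(n_words: int = 16, group_size: int = 4) -> int:
    """Groupings that differ in which words go together, ignoring group order."""
    n_groups = n_words // group_size
    return ordered_splits(n_words, group_size) // math.factorial(n_groups)


def puzzles_for_coin_flip(n_solutions: int) -> int:
    """Smallest n with P(at least one correct random guess in n puzzles) >= 0.5.

    Assumes one uniformly random guess per puzzle: P(miss all) = (1 - 1/N)^n.
    log1p keeps the tiny 1/N term accurate.
    """
    return math.ceil(math.log(0.5) / math.log1p(-1 / n_solutions))


ordered = ordered_splits()        # 63,063,000
distinct = distinct_groupings()   # 2,627,625
days = puzzles_for_coin_flip(distinct)
print(f"{ordered:,} ordered splits, {distinct:,} distinct groupings")
print(f"{days:,} daily puzzles, or about {days / 365.25:,.0f} years")
```

Because each day's guess is a fresh draw on a new puzzle, the coin-flip point lands near N × ln 2 puzzles rather than N / 2.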

How does the algorithm work?

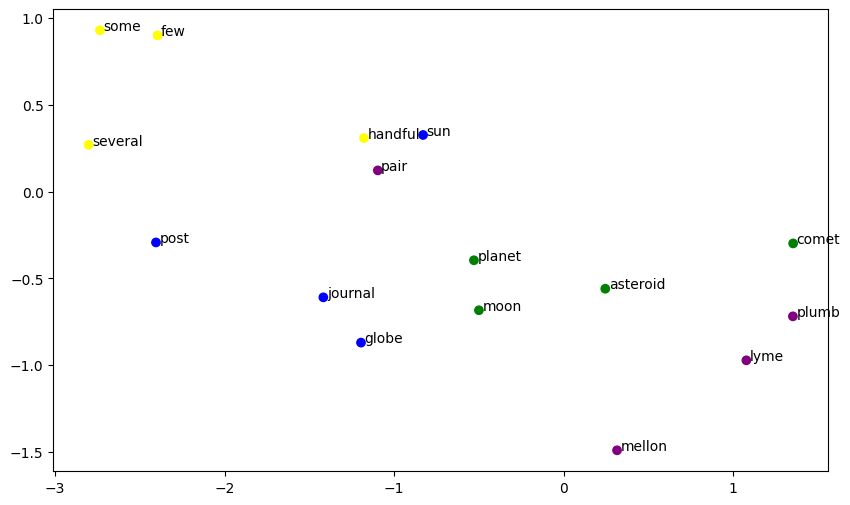

How does GloVe solve Connections? GloVe is a 2014-era predecessor to today's Large Language Models. All it does is map each word to a set of coordinates that represent its meaning, where words with similar coordinates have similar meanings. It's like how latitude and longitude represent locations on Earth, and places with similar coordinates are close to each other.

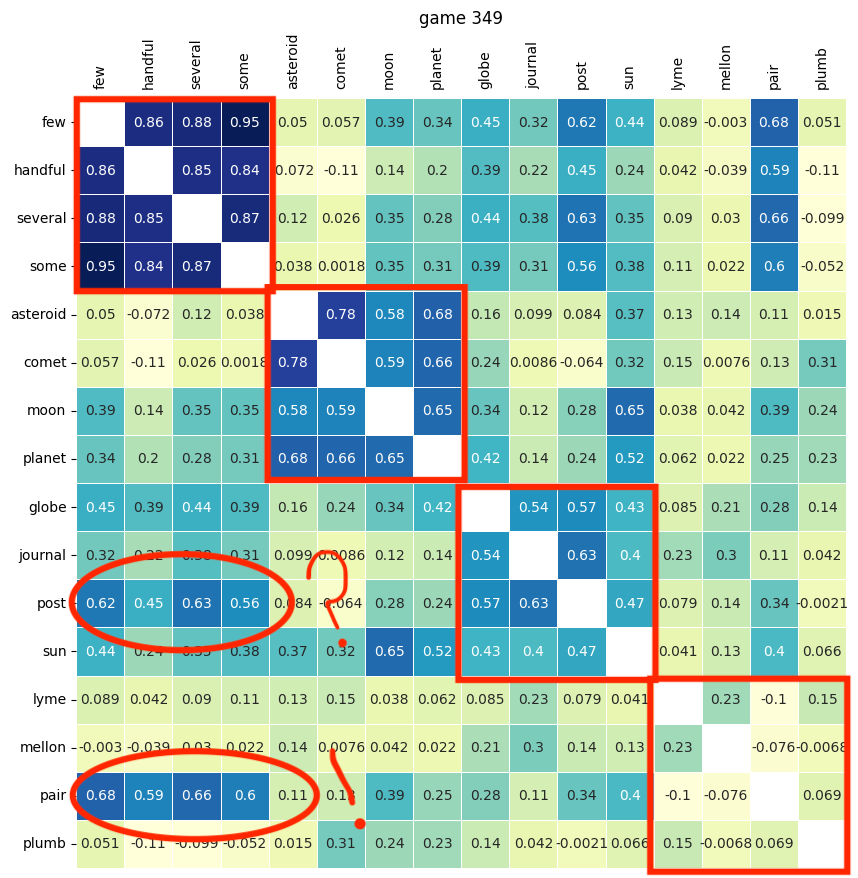

First, we get GloVe's similarity (how close two words are, the opposite of distance) between each word and every other word in the puzzle. That looks like this.

We already know the answer to this puzzle, and you can see that for most of the clusters (in red boxes), the words are similar to each other -- so, the "coherence" is high.

There are a few distractors: pair is similar to few, handful, several and some. That makes sense. But post is too, which I can't explain.
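To show what "similarity" means here, a small Python sketch, assuming cosine similarity (the measure Gensim's `KeyedVectors.similarity` computes). The vectors below are made up and three-dimensional. With real data you'd load pretrained GloVe vectors, for example via `gensim.downloader.load("glove-wiki-gigaword-100")`, and call `similarity("moon", "comet")` on the result; which GloVe model this post used isn't stated.

```python
import math

# Made-up 3-dimensional vectors, for illustration only.
# Real GloVe vectors have 50 to 300 dimensions.
TOY_VECTORS = {
    "moon": [0.9, 0.1, 0.0],
    "comet": [0.8, 0.2, 0.1],
    "few": [0.0, 0.9, 0.2],
    "several": [0.1, 0.8, 0.3],
}


def cosine_similarity(a, b):
    """1.0 means same direction (similar meaning); near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity_matrix(words, vectors):
    """Similarity of every word to every other word, as a square grid."""
    return [[cosine_similarity(vectors[a], vectors[b]) for b in words] for a in words]


words = list(TOY_VECTORS)
matrix = similarity_matrix(words, TOY_VECTORS)
for word, row in zip(words, matrix):
    print(f"{word:>8}", " ".join(f"{s:5.2f}" for s in row))
```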

But when we're solving a puzzle, we don't know the right answer yet. To start, my algorithm picks a starting word, then finds the word most similar to it. Then it averages the vectors for those two words to represent the cluster, and finds the word closest to that average. It averages the cluster again and adds the word closest to the new average. That gives a four-word cluster for that starting word, and the algorithm builds one using every word in the puzzle as the starting word. The cluster whose members are closest, on average, to the cluster's center is the one the algorithm "guesses". To finish the puzzle, it repeats the same process with the remaining words.
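The greedy procedure above can be sketched in Python like this. Assumptions: "closest" means cosine distance (1 minus cosine similarity), a cluster's incoherence is the average distance of its members from its center, and the vectors are made-up stand-ins for GloVe. The function names are hypothetical, not the author's code.

```python
import math


def mean_vector(vectors):
    """Component-wise average: the 'center' of a cluster."""
    return [sum(parts) / len(vectors) for parts in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity: 0 for identical directions, larger for less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1 - dot / norms


def grow_cluster(seed, pool, vectors, size=4):
    """Start from `seed`, then repeatedly add the word closest to the cluster's average."""
    cluster = [seed]
    while len(cluster) < size:
        center = mean_vector([vectors[w] for w in cluster])
        candidates = [w for w in pool if w not in cluster]
        cluster.append(min(candidates, key=lambda w: cosine_distance(vectors[w], center)))
    return cluster


def incoherence(cluster, vectors):
    """Average distance from each member to the cluster's center (lower = tighter)."""
    center = mean_vector([vectors[w] for w in cluster])
    return sum(cosine_distance(vectors[w], center) for w in cluster) / len(cluster)


def solve(words, vectors, size=4):
    """Grow a cluster from every seed, keep the tightest, repeat on what's left."""
    remaining = list(words)
    groups = []
    while remaining:
        clusters = [grow_cluster(seed, remaining, vectors, size) for seed in remaining]
        best = min(clusters, key=lambda c: incoherence(c, vectors))
        groups.append(best)
        remaining = [w for w in remaining if w not in best]
    return groups


# Made-up 3-d vectors for two obvious groups (real GloVe vectors are much longer).
TOY = {
    "moon": [1.0, 0.1, 0.0], "comet": [0.9, 0.0, 0.1],
    "planet": [1.0, 0.0, 0.2], "asteroid": [0.8, 0.1, 0.1],
    "few": [0.1, 1.0, 0.0], "some": [0.0, 0.9, 0.1],
    "several": [0.2, 1.0, 0.1], "handful": [0.1, 0.8, 0.0],
}
print(solve(list(TOY), TOY))
```

Trying every word as the seed costs under a thousand distance computations per group, so this runs instantly even in pure Python.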

My solver just tries to guess the right answer on the first try. I haven't yet asked it to try again if it gets something wrong.

What makes a solution good?

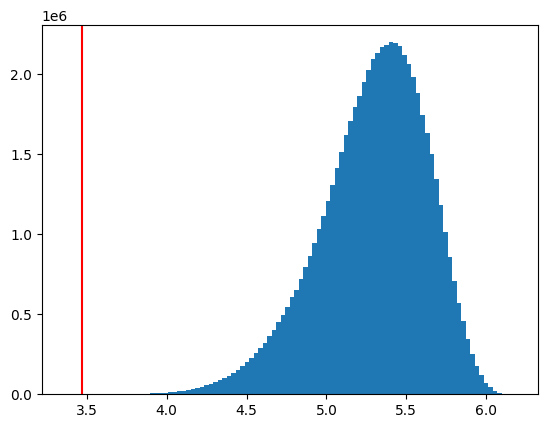

If you ranked all 63 million possible solutions by incoherence, the right answer would almost always land among the least "incoherent" ones. But it isn't always the absolute least incoherent. Check out this histogram²:

The correct answer (the red line) has a very low incoherence score, way on the far left of the histogram. You can't even see it on the chart.

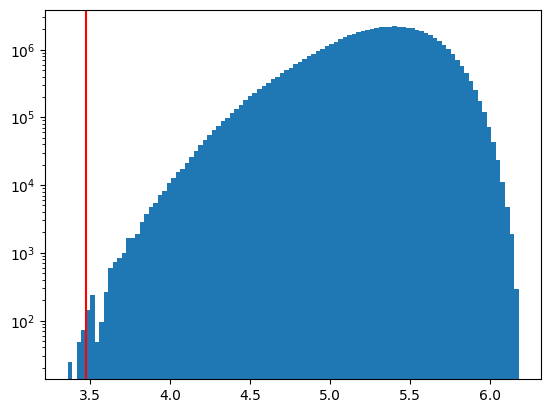

If you look at a log-scale histogram, you can see the 154 solutions that rank higher (that is, score as less incoherent) than the right answer, like this one:

- handful, several, few, some ✅

- moon, asteroid, comet, planet ✅

- mellon, lyme, plumb, journal ❌

- post, sun, globe, pair ❌
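Ranking the right answer against every alternative needs a way to enumerate solutions. A Python sketch, assuming a solution's incoherence is the sum of its four groups' incoherence (the post doesn't say how groups are combined) and again using made-up vectors. The generator always puts the first remaining word into the next group, so it produces each distinct grouping exactly once (455 × 5,775 = 2,627,625 for sixteen words) and streams them instead of storing them all.

```python
import math
from itertools import combinations


def mean_vector(vectors):
    return [sum(parts) / len(vectors) for parts in zip(*vectors)]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1 - dot / norms


def incoherence(group, vectors):
    """Average distance from each word to its group's center."""
    center = mean_vector([vectors[w] for w in group])
    return sum(cosine_distance(vectors[w], center) for w in group) / len(group)


def solution_incoherence(groups, vectors):
    """Assumption: a solution's score is the sum of its groups' incoherence."""
    return sum(incoherence(g, vectors) for g in groups)


def all_groupings(words, size=4):
    """Yield every split of `words` into unordered groups of `size`.

    The first remaining word always anchors the next group, so each grouping
    appears exactly once, and nothing is held in memory beyond the current one.
    """
    if not words:
        yield []
        return
    first, rest = words[0], words[1:]
    for companions in combinations(rest, size - 1):
        remaining = [w for w in rest if w not in companions]
        for tail in all_groupings(remaining, size):
            yield [(first, *companions), *tail]


def better_than_answer(words, answer, vectors, size=4):
    """Count wrong solutions that score as less incoherent than the real answer."""
    target = {frozenset(g) for g in answer}
    answer_score = solution_incoherence(answer, vectors)
    return sum(
        1
        for grouping in all_groupings(words, size)
        if {frozenset(g) for g in grouping} != target
        and solution_incoherence(grouping, vectors) < answer_score
    )


# Made-up vectors for an 8-word, two-group toy puzzle.
TOY = {
    "moon": [1.0, 0.1, 0.0], "comet": [0.9, 0.0, 0.1],
    "planet": [1.0, 0.0, 0.2], "asteroid": [0.8, 0.1, 0.1],
    "few": [0.1, 1.0, 0.0], "some": [0.0, 0.9, 0.1],
    "several": [0.2, 1.0, 0.1], "handful": [0.1, 0.8, 0.0],
}
answer = [["moon", "comet", "planet", "asteroid"], ["few", "some", "several", "handful"]]
print(better_than_answer(list(TOY), answer, TOY))
```

Over the full 2.6 million groupings this pure-Python loop would be slow. One natural speedup is caching each four-word group's score, since there are only C(16, 4) = 1,820 possible groups.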

The easiest Connections puzzle EVER

#260 from February 26, 2024.

| manner | sour | expression | bitter |
| brave | kind | variety | confront |
| sweet | type | salty | sort |
| meet | face | surreal | romantic |

The hardest Connections puzzle EVER

#150 from November 8, 2023

| flip-flop | russian | wedge | waver |
| mary | see-saw | mule | curly |
| yo-yo | waffle | shoestring | sunscreen |
| towel | breeze | umbrella | hedge |

What puzzles are the easiest?

My algorithm correctly solves nine of 400 games on the first try.³ All of these games' answers are more coherent than the average game's, and seven of them are among the most coherent 25% of all games.

(It also gets two of the four groups right on the first try in 20 games, or 5.0%.)
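Scoring a first-try guess comes down to comparing groups as sets, so word order inside a group doesn't matter. A Python sketch with hypothetical helper names; the answer below is my reading of the example puzzle (the two ✅ groups from the near-miss above, plus the fruit sound-alikes and the newspaper names).

```python
from collections import Counter


def correct_groups(guess, answer):
    """How many guessed groups exactly match one of the answer's groups."""
    answer_sets = {frozenset(g) for g in answer}
    return sum(frozenset(g) in answer_sets for g in guess)


def tally(results):
    """results: one (guess, answer) pair per game -> Counter of correct-group counts."""
    return Counter(correct_groups(guess, answer) for guess, answer in results)


answer = [
    ["handful", "several", "few", "some"],
    ["moon", "asteroid", "comet", "planet"],
    ["mellon", "lyme", "plumb", "pair"],
    ["post", "sun", "globe", "journal"],
]
guess = [  # the near-miss solution from the log-scale histogram
    ["handful", "several", "few", "some"],
    ["moon", "asteroid", "comet", "planet"],
    ["mellon", "lyme", "plumb", "journal"],
    ["post", "sun", "globe", "pair"],
]
print(correct_groups(guess, answer))  # 2
print(tally([(guess, answer), (answer, answer)]))
```

A score of 3 can't happen: once three groups match, the last four words have nowhere else to go. That's why partial successes show up as two-group results.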

I'd be curious to know if these games were the easiest for humans too. Maybe the NYT knows?⁴

The ones it gets right are:

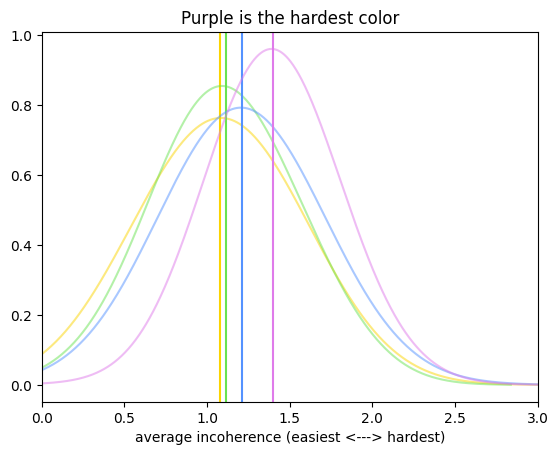

Why is purple so hard in NYT Connections?

Purple really is the hardest color in NYT Connections. According to my analysis of how similar each group's words are across 400 games, yellow is the easiest on average, green is a little harder, then blue, and purple is the hardest.

Of course, your mileage may vary. These rankings come from measuring how "coherent" each cluster of four words is with GloVe, an early AI tool.

Why is purple so hard for computers (and for Jeremys)? Fully 53% of the purple explanations include a blank or the words "WORDS" or "THINGS". (It's less than 7% for the other colors.)
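A sketch of that per-color breakdown in Python. The record format (color, incoherence score, explanation) and the sample rows are hypothetical, and the regex is one reading of "a blank or the words WORDS or THINGS", not necessarily the exact rule used here.

```python
import re
from collections import defaultdict
from statistics import mean

# A blank ("___") or the standalone words WORDS / THINGS.
HINT = re.compile(r"_+|\bWORDS\b|\bTHINGS\b")


def summarize_by_color(groups):
    """groups: iterable of (color, incoherence, explanation) tuples (hypothetical format)."""
    scores = defaultdict(list)
    hinted = defaultdict(int)
    for color, score, explanation in groups:
        scores[color].append(score)
        if HINT.search(explanation.upper()):
            hinted[color] += 1
    return {
        color: {
            "mean_incoherence": mean(values),
            "share_with_hint": hinted[color] / len(values),
        }
        for color, values in scores.items()
    }


# Illustrative rows only, not real archive data or real scores.
sample = [
    ("yellow", 0.20, "Planets"),
    ("yellow", 0.30, "Citrus fruits"),
    ("purple", 0.60, "___ Ball"),
    ("purple", 0.50, "Fruit homophones"),
]
for color, stats in summarize_by_color(sample).items():
    print(color, stats)
```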

Is purple the hardest category for you in Connections? Or is another one harder?

What are the most common words in NYT Connections?

Some words appear again and again. Here they are:

| word | count |
|---|---|
| ring | 12 |
| ball | 11 |
| wing | 10 |
| lead | 10 |
| baby | 9 |
| fly | 9 |
| jack | 9 |
| heart | 9 |
| copy | 9 |

To my surprise, baby appears in purple four out of the nine times it appears overall.

In purple, the most common words are dog (6) and fish (5), followed by bird, dragon, baby, honey, stick and star (4 each).
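Tallies like these take two `collections.Counter`s. This Python sketch assumes a hypothetical format in which each game is a dict from color to its words; the real Connections archive may be laid out differently, and the sample games are made up and trimmed to two groups each.

```python
from collections import Counter


def word_counts(games):
    """games: list of {color: [words]} dicts (hypothetical archive format).

    Returns (overall counts, purple-only counts), case-insensitive.
    """
    overall, purple = Counter(), Counter()
    for game in games:
        for color, words in game.items():
            for word in words:
                overall[word.lower()] += 1
                if color == "purple":
                    purple[word.lower()] += 1
    return overall, purple


# Two made-up games, just to show the shape of the data.
games = [
    {"yellow": ["RING", "BALL", "WING", "LEAD"], "purple": ["BABY", "DOG", "FISH", "STAR"]},
    {"yellow": ["BABY", "COPY", "JACK", "FLY"], "purple": ["DOG", "HONEY", "STICK", "RING"]},
]
overall, purple = word_counts(games)
print(overall.most_common(3))
print(purple.most_common(1))  # [('dog', 2)]
```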

How would an LLM chatbot do?

Poorly! (Answers trimmed.)

Anthropic's Claude gave this answer -- wrong, but confident. One cluster right.

Celestial bodies: planet, moon, comet, asteroid

Earth-related terms: globe, sun, plumb, lyme

Quantities: handful, several, pair, some

Publications: post, journal, mellon, few

The last category might seem a bit odd, but "Post" and "Journal" are common newspaper names, while "Mellon" could refer to the Andrew W. Mellon Foundation which publishes research, and "Few" might be a less common publication name or a tricky misdirect.

Google Gemini

The contender from Mountain View also screws it up, although it's a bit less confident in itself.

Let's Analyze the Words

- Space-related: planet, moon, comet, asteroid

- Quantity/number: handful, several, few, some

- Objects/things: post, plumb, globe, journal

- General terms: pair, mellon, sun, lyme

OpenAI's ChatGPT

ChatGPT made up some new rules for Connections (find one word related to each of the sixteen words). Once I explained the actual rules, this is what it guessed.

No, I don't know why it didn't finish group four and started speaking German and Italian instead.

I am charmed that it offered the Elvish word for "friend" as an option.

Group 1: Celestial Bodies - moon - comet - asteroid - sun

Group 2: Units of Measurement - handful - several - pair - few

Group 3: Types of Publications - journal - post - lyme (possibly referring to Lyme disease, which is named after Lyme, Connecticut) - globe

Group 4: Words Related to Plumb - plumb - mellon (likely referring to a Mellon, as in Andrew Mellon, but contextually could relate to "mellon" as in friend in Elvish, from The Lord of the Rings) ? hanno :b Unternehmen . delle

Acknowledgements

Thanks to Wyna Liu for inventing Connections, and to The New York Times for publishing it. Thanks to Swell Garfo / Anthony Kenzo Salazar for publishing the Connections archive. Thanks to the GloVe people and the Gensim people.

1. I mentioned this to my wife, and she said, "That's not the point, babe!" She's right -- as usual -- but I did it anyways. (When we got our marriage license, like all husbands, I promised the government that I'd make the "as usual" joke every time I say my wife is right about something.) ↩

2. This took several days to run. The 63,063,000 possible solutions for each Connections puzzle weigh 9.5 gigabytes on disk! Just calculating the histogram took about six hours of CPU time. An early recursive attempt to generate all the solutions and store them in memory ended up using 63GB of RAM. ↩

3. A variant algorithm using k-means clustering solves three different games correctly on the first try. ↩

4. Back in the day, I could've scraped Twitter for people's scores and analyzed that. Now Twitter's API is more or less deactivated. ↩